Monitor errors in experiments with custom filters for arrays

When releasing new features or conducting experiments, it is always a good idea to let a subset of users try them before rolling them out to everyone. In addition to assessing whether the feature is working as intended, this approach allows you to check whether an individual feature or experiment is introducing new errors in your application. The last thing you want is customers abandoning your app and seeking out alternatives after a crash or a poor interaction caused by errors in the latest features.

Testing and monitoring

Two common strategies are used for managing the implementation of new features, namely experiments and feature flags. They are usually run in mobile and client-side applications, such as those built for Android, iOS, React Native, Expo, and Unity.

- Experiments can be simple A/B tests or contain multiple variants so the variant which has the best impact can be selected. An example is experimenting with different styles of a sign-up button to see the effect on conversions and then using the most successful style in a general rollout.

- Feature flags reduce risks by managing the rollout of a new feature to a limited audience to monitor its impact. For example, perhaps only one percent of users see a new feature on the first day. Then, if all goes well, ten percent see the feature the next day, and so on.
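The staged rollout described above (one percent, then ten percent, and so on) can be sketched as a deterministic bucketing function. This is a minimal illustration, not how any particular feature-flag service works: the function name `isFeatureEnabled`, its parameters, and the hashing scheme are all assumptions made for this example.

```kotlin
// Hypothetical helper: decides whether a user falls inside the current rollout percentage.
// The name and the hashing scheme are assumptions, not part of Bugsnag or any feature-flag SDK.
fun isFeatureEnabled(userId: String, featureName: String, rolloutPercent: Int): Boolean {
    require(rolloutPercent in 0..100) { "rolloutPercent must be between 0 and 100" }
    // Hash the feature name together with the user ID so each feature splits users differently.
    // floorMod keeps the bucket in 0..99 even when hashCode() is negative.
    val bucket = Math.floorMod("$featureName:$userId".hashCode(), 100)
    return bucket < rolloutPercent
}
```

Because a user's bucket never changes, anyone who saw the feature at one percent still sees it at ten percent, which keeps the audience stable as the rollout widens.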

Both experiments and feature flags provide the ability to monitor error rates, which is an important metric when deciding whether to continue with a feature rollout or pick a variant in an experiment as the winner. New features should meet your standards for stability before they reach all of your users.
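As a rough illustration of using error rates in that decision, the sketch below compares variants against a stability threshold. The `VariantStats` type, the `stableVariants` function, and the idea of counting sessions with errors are assumptions for this example; the counts would come from your own monitoring data.

```kotlin
// Hypothetical data holder: per-variant counts taken from your own monitoring data.
data class VariantStats(val name: String, val sessions: Int, val sessionsWithErrors: Int) {
    // Fraction of sessions that hit at least one error; 0.0 when there is no traffic yet.
    val errorRate: Double
        get() = if (sessions == 0) 0.0 else sessionsWithErrors.toDouble() / sessions
}

// Keeps only the variants whose error rate is at or below the chosen threshold.
fun stableVariants(variants: List<VariantStats>, maxErrorRate: Double): List<VariantStats> =
    variants.filter { it.errorRate <= maxErrorRate }
```

A variant that converts well but is filtered out here would not be a safe winner to roll out.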

New custom filters for arrays

Custom filters in Bugsnag allow you to search for events by custom metadata you’ve added to your error reports.

While you previously needed to create a new custom filter for each experiment in Bugsnag, it’s now easy to monitor errors happening in your experiments, A/B tests, and feature flags with custom filters for arrays.

This new capability allows you to monitor errors across all of your experiments without requiring extra setup or configuration in Bugsnag every time you start a new experiment.

It is also more efficient to discover and fix issues introduced by a new feature or experiment. Perhaps best of all, standards for stability are upheld since it’s easy to confirm that the best-performing variant of your experiment isn’t going to reduce the stability of your app.

How to get started

Three simple steps will put you on your way to monitoring errors in your experiments.

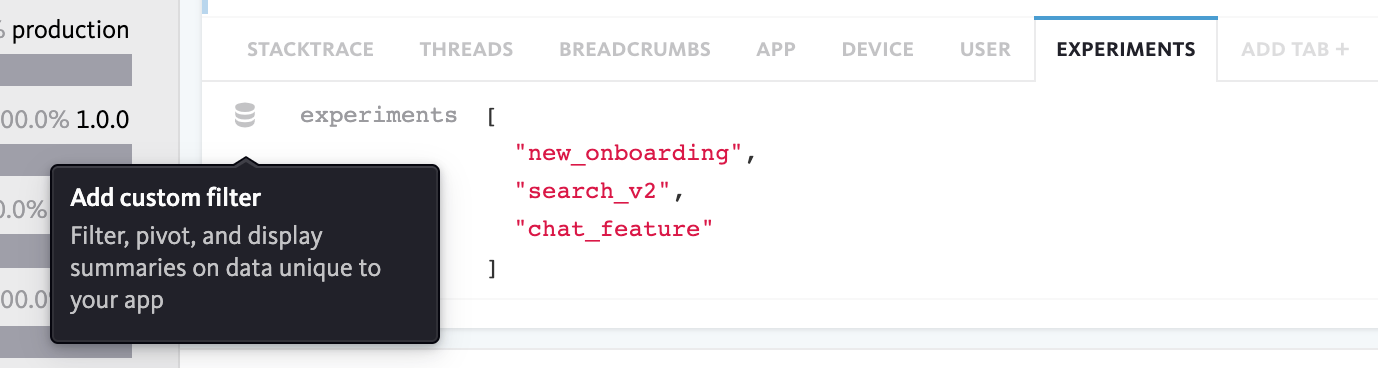

Step 1: Add metadata to your error reports recording the list of experiments or A/B tests that the user is a part of. For example, using the Android notifier:

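Bugsnag.beforeNotify { error ->
    // Attach the user's active experiments as an array under the "experiments" tab and key
    error.addToTab("experiments", "experiments", arrayOf("new_onboarding", "search_v2", "chat_feature"))
    // Return true so the report is still sent
    true
}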
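The snippet in Step 1 hard-codes the experiment names. In a real app the list would usually come from your experiment or feature-flag system. The helper below is a sketch under that assumption: `activeExperiments` and the shape of its input map are hypothetical and not part of the Bugsnag notifier.

```kotlin
// Hypothetical helper: turns a map of experiment name -> enrolled flag into the array to attach.
// Not part of the Bugsnag notifier; the input map stands in for your own experiment system.
fun activeExperiments(assignments: Map<String, Boolean>): Array<String> =
    assignments
        .filterValues { enrolled -> enrolled } // keep only experiments the user is enrolled in
        .keys
        .sorted()                              // stable order makes reports easier to compare
        .toTypedArray()
```

The resulting array could then be passed in place of the literal `arrayOf(...)` in the Step 1 callback.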

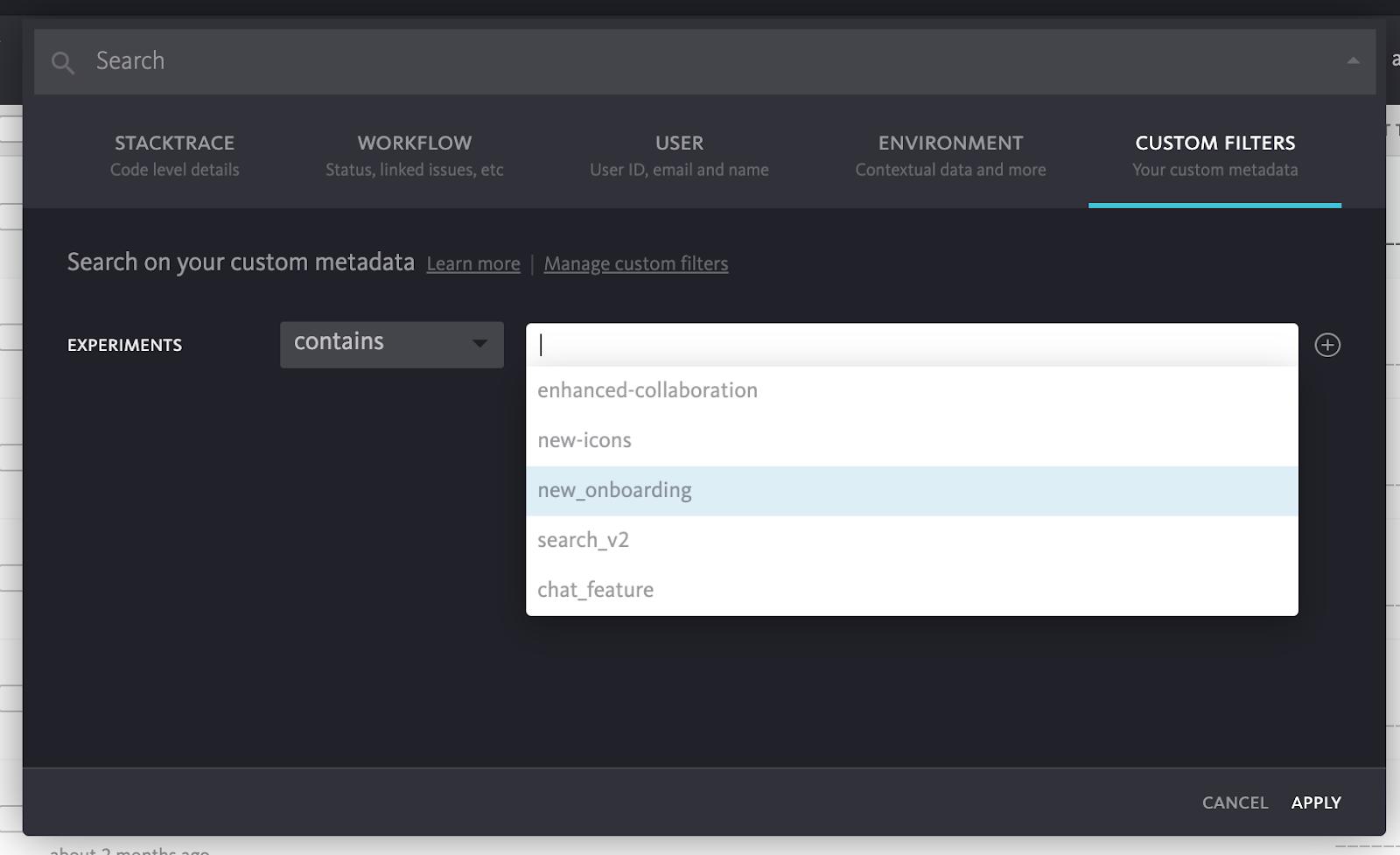

Step 2: In the Bugsnag dashboard, create a custom filter for the experiment metadata field.

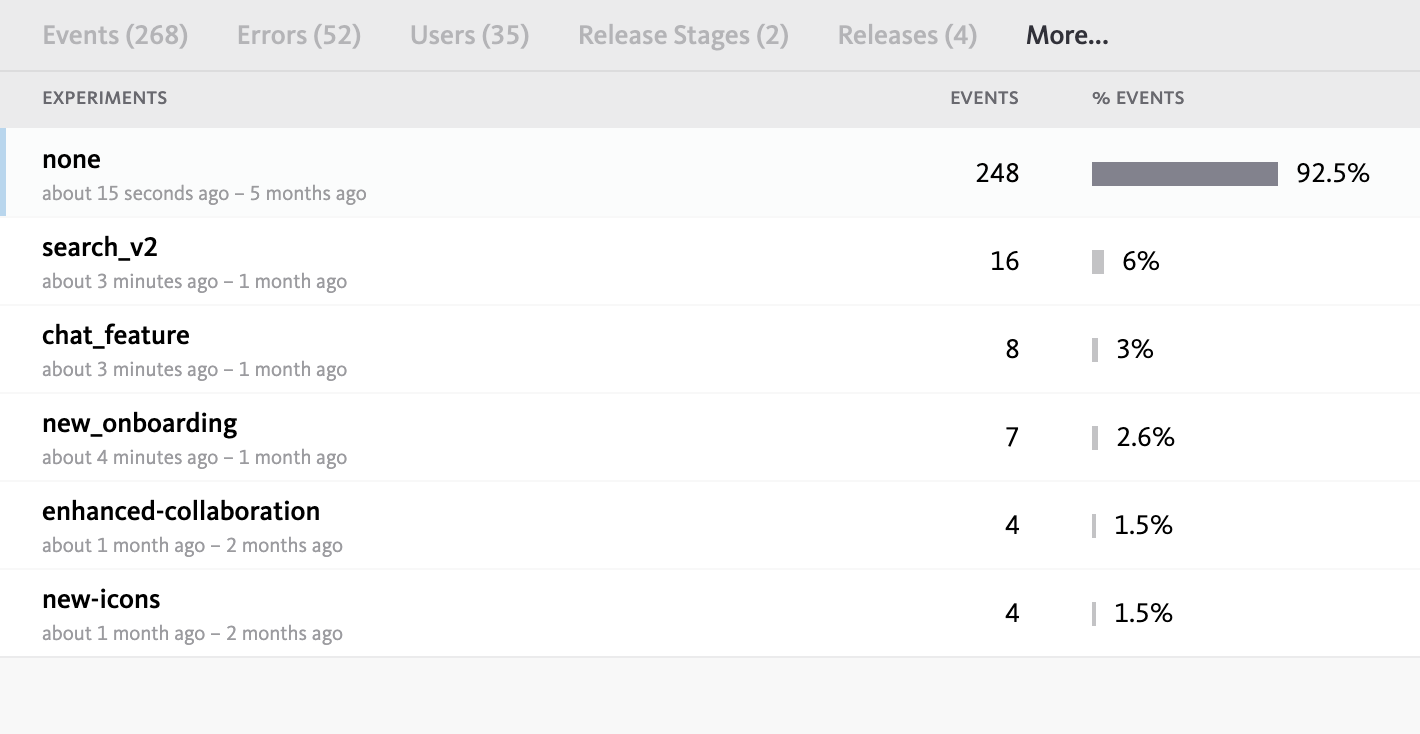

Step 3: Use the filter bar to show only those errors that affect users in an experiment. You can also view a breakdown of active experiments across all instances of an error in pivot tables.

Once the custom filter is set up for the array, any new experiments are automatically available in Bugsnag for filtering and pivot tables.
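To make the filter and pivot behavior concrete, here is a small in-memory model of what filtering on an array field and breaking events down by experiment mean. It only illustrates the concept: `ErrorEvent`, `eventsInExperiment`, and `experimentBreakdown` are hypothetical names, and none of this reflects Bugsnag's internal data model or API.

```kotlin
// Hypothetical in-memory model of an error event; not Bugsnag's data model or API.
data class ErrorEvent(val errorClass: String, val experiments: List<String>)

// Filter: keep events whose experiments array contains the given experiment.
fun eventsInExperiment(events: List<ErrorEvent>, experiment: String): List<ErrorEvent> =
    events.filter { experiment in it.experiments }

// Pivot: count how many events each experiment appears in.
fun experimentBreakdown(events: List<ErrorEvent>): Map<String, Int> =
    events
        .flatMap { it.experiments.distinct() } // count each experiment at most once per event
        .groupingBy { it }
        .eachCount()
```

Because the experiment names are read from the array itself, a newly added experiment shows up in both results without changing any of this code, which mirrors why no extra setup is needed per experiment.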

On Bugsnag’s Enterprise plan, one custom filter for arrays can now be enabled per project, supporting 100 active experiments per user at any one time. Customers on the Enterprise plan should contact us to get started.

Coming soon

Stay tuned! We plan to continue to improve support for measuring the stability of experiments in the future. Until then, we hope you benefit from running experiments and using feature flags while monitoring errors with Bugsnag.