SDKs should not crash apps — learnings from the Facebook outage

Facebook iOS SDK Shuts Down

Last evening, a GitHub issue reported that a change to the Facebook API was causing crashes on many (possibly all) requests made from Facebook’s iOS SDK. As a result, applications using the Facebook iOS SDK began crashing, leading to major outages worldwide. Because the bug was already present in the shipped SDK and was triggered by a server-side change in the Facebook API’s behavior, no app update was needed to set it off: the crashes began immediately and simultaneously across affected apps. It was an unexploded landmine.

Impact on mobile apps

Most major consumer applications have some kind of Facebook integration, for example “Log in with Facebook” or “Share on Facebook”. Because many applications are deeply integrated with the Facebook SDK and initialize it at launch, this bug made them crash on every boot, effectively a total outage. TechCrunch reported that Spotify, Pinterest, and TikTok were all down.

As a widely used crash reporting product, we could see in our own data that several popular mobile apps were affected and saw a huge increase in crash volume as a result.

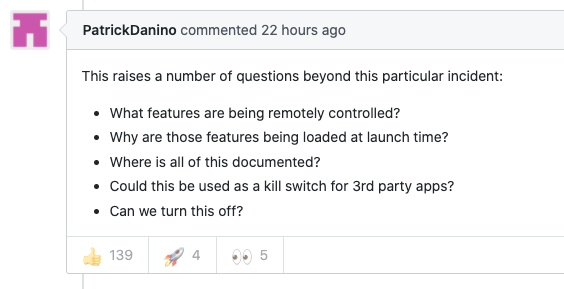

Customer A: Consumer Mobile App

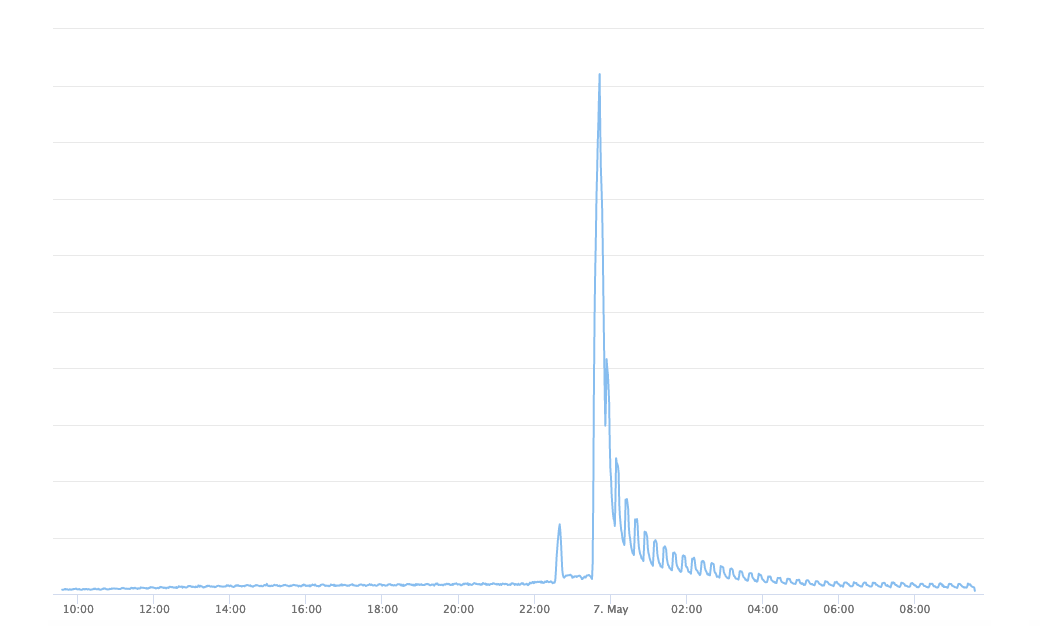

Customer B: Ecommerce App

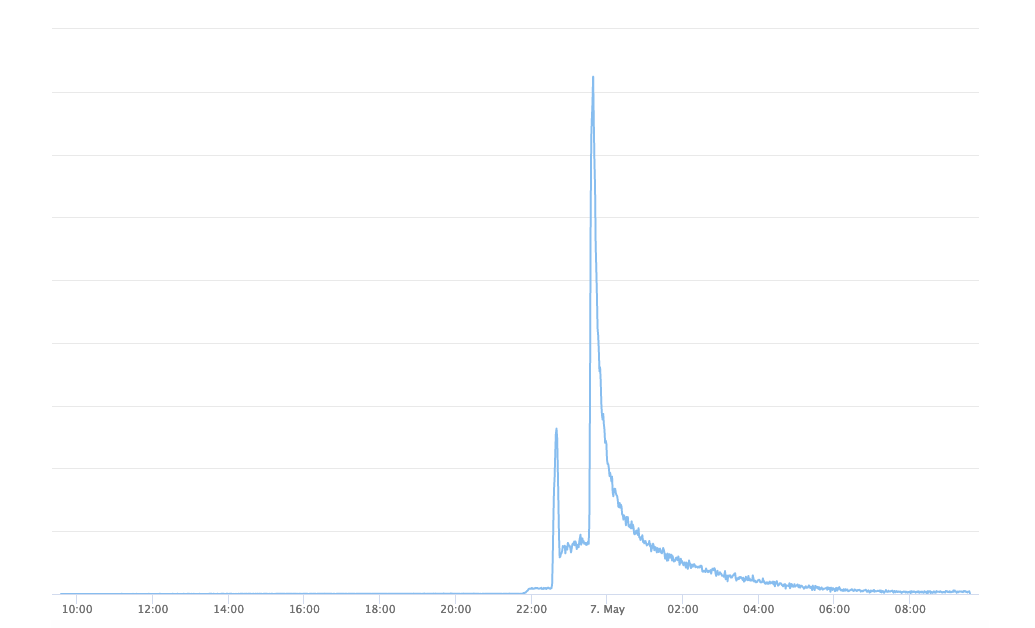

Customer C: Gaming App

Identifying the Root Cause

- We’re still waiting on an official post-mortem from Facebook (hopefully one is planned), but from what we can ascertain, this outage was caused by a change in the format of a response from the Facebook API.

- When the Facebook SDK initializes, it fetches “configuration data” from Facebook’s servers. This lets developers control how the SDK behaves from the Facebook website, rather than hard-coding configuration at build time. The approach will be familiar to anyone who has used feature flags or phased rollouts.

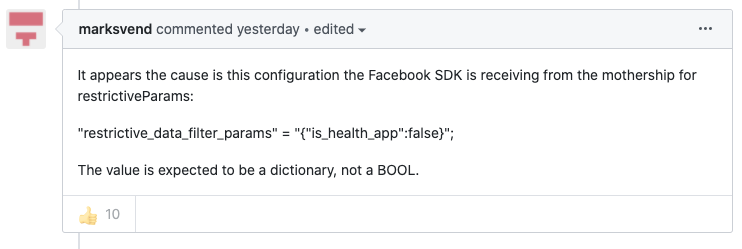

- The data type of a particular property (“restrictive_data_filter_params.is_health_app”) suddenly changed in the Facebook API response from a dictionary to a bool, causing a crash.

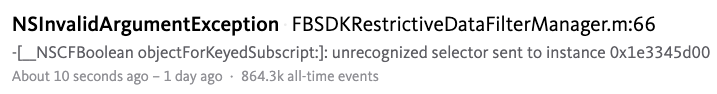

- The crash we saw was this one

- Variations of this error (sometimes with different line numbers, because apps shipped different versions of the Facebook SDK) made up the entire spike of errors we saw on our end.

- You can see the format the Facebook SDK expected in its source code here.

- This was also noticed by marksvend on GitHub.

- When code expects a dictionary but receives a Boolean, bad things happen; in this case, the entire application crashed. Worse, because the SDK fetched this configuration from Facebook’s servers on application boot, people’s applications were crashing ON BOOT.

- Additionally, the only reference to “restrictive_data_filter_params” I could find in Facebook’s Graph API reference lists it as a “string” data type, which makes it even less clear what data type was expected.
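To make the failure mode concrete: if code written in Objective-C treats a decoded JSON value as a dictionary, a JSON boolean arrives as an `NSNumber`, and sending it a dictionary-only message such as `objectForKey:` raises an unrecognized-selector exception that terminates the app if nothing catches it. The Swift sketch below shows the defensive alternative, which is to check the type of every value before using it. The JSON layout and the `FilterRule` type are hypothetical simplifications, not the SDK’s real format or code; only the key names `restrictive_data_filter_params` and `is_health_app` come from the incident.

```swift
import Foundation

// Hypothetical, simplified stand-in for a parsed filter rule; the real
// Facebook SDK types and JSON layout are not reproduced here.
struct FilterRule {
    let eventName: String
    let params: [String: Any]
}

/// Reads `restrictive_data_filter_params` defensively. Every value is
/// checked with a conditional cast (`as?`), and entries of the wrong type
/// are skipped instead of being used as dictionaries.
func parseFilterRules(from data: Data) -> [FilterRule] {
    // The payload and the nested params must both be JSON objects;
    // otherwise there are no rules to apply.
    guard
        let root = try? JSONSerialization.jsonObject(with: data) as? [String: Any],
        let filters = root["restrictive_data_filter_params"] as? [String: Any]
    else {
        return []
    }

    var rules: [FilterRule] = []
    for (eventName, value) in filters {
        // A Bool where a dictionary was expected (the incident's shape change)
        // fails the cast and is skipped rather than crashing.
        guard let params = value as? [String: Any] else { continue }
        rules.append(FilterRule(eventName: eventName, params: params))
    }
    // Dictionary iteration order is unspecified, so sort for stable output.
    return rules.sorted { $0.eventName < $1.eventName }
}

// Usage: a hypothetical payload where `is_health_app` arrives as a Bool.
let payload = Data(#"{"restrictive_data_filter_params": {"is_health_app": false, "some_event": {"some_param": "1"}}}"#.utf8)
let rules = parseFilterRules(from: payload)
print(rules.map { $0.eventName })  // prints ["some_event"]
```

The unexpected Boolean costs one ignored entry instead of the whole app; the remaining rules still apply.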

Some key takeaways

- This absolutely should not crash an application. One of the tenets of good SDK design is that SDKs SHOULD NEVER CRASH THE APP.

- With defensive programming and better handling of malformed server data, the SDK could have skipped Facebook initialization instead of crashing the application or, better still, fallen back to default settings whenever the server responds with junk data.

- Additionally, having API data validation in place (for example, automated tests that check the data types in API responses) could have avoided this situation entirely. Services like Runscope offer this.
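The fallback-to-defaults idea can be sketched in Swift as follows, assuming a hypothetical settings payload: the keys `event_logging_enabled` and `flush_interval_seconds`, the `SDKSettings` type, and its default values are illustrative inventions, not Facebook’s configuration format. Each field is decoded separately with `try?`, so a missing key or a value of the wrong type keeps its built-in default, and a response that cannot be parsed at all yields the defaults instead of a crash.

```swift
import Foundation

/// Hypothetical SDK settings. Field names, JSON keys and default values are
/// illustrative only, not Facebook's real configuration format.
struct SDKSettings: Decodable, Equatable {
    // Defaults baked into the SDK at build time.
    var eventLoggingEnabled = true
    var flushIntervalSeconds = 15

    enum CodingKeys: String, CodingKey {
        case eventLoggingEnabled = "event_logging_enabled"
        case flushIntervalSeconds = "flush_interval_seconds"
    }

    init() {}

    init(from decoder: Decoder) throws {
        let container = try decoder.container(keyedBy: CodingKeys.self)
        // `try?` turns a missing key or a wrong type into nil, so that one
        // field keeps its default instead of failing the whole decode.
        if let enabled = try? container.decode(Bool.self, forKey: .eventLoggingEnabled) {
            eventLoggingEnabled = enabled
        }
        // Also reject values that have the right type but make no sense.
        if let interval = try? container.decode(Int.self, forKey: .flushIntervalSeconds), interval > 0 {
            flushIntervalSeconds = interval
        }
    }
}

/// Returns remote settings when they can be read, otherwise the defaults.
/// `nil` stands for a failed or empty network response.
func loadSettings(from data: Data?) -> SDKSettings {
    guard let data = data,
          let remote = try? JSONDecoder().decode(SDKSettings.self, from: data)
    else {
        return SDKSettings()
    }
    return remote
}

// Usage: junk in both fields leaves every setting at its default.
let junk = Data(#"{"event_logging_enabled": {"is_health_app": true}, "flush_interval_seconds": "soon"}"#.utf8)
print(loadSettings(from: junk) == SDKSettings())  // prints true
```

Falling back per field means one bad property degrades a single setting rather than discarding all remote configuration. Rejecting the whole response and using every default is a reasonable alternative when fields depend on one another.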

Bugsnag’s Elastic Queuing Infrastructure

Bugsnag’s systems dealt with this by buffering the huge volume of additional crash reports rather than dropping them. Once our systems had scaled up to handle the extra load, and Facebook had rolled back the change that caused the bug, we were able to process the entire backlog of events.

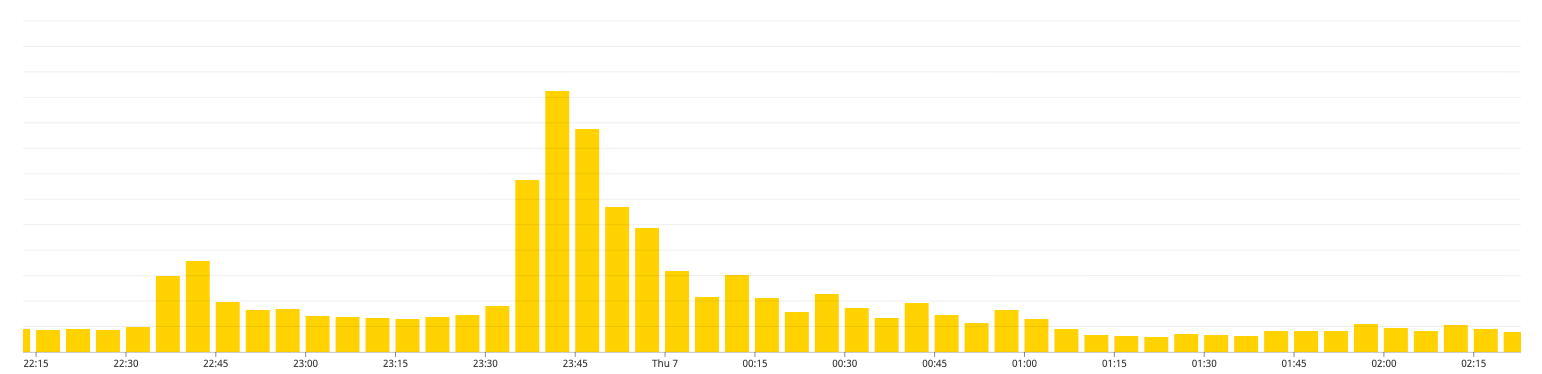

Between 2020-05-06 22:36 UTC and 2020-05-07 02:21 UTC, we had delays processing events due to a large, simultaneous increase in event reports from multiple projects. The delay was most significant on what we call our “MachO” queue (where we push events from Apple platform projects: iOS, macOS, tvOS, etc.). Even with this surge in volume delaying processing, we were still able to process the majority of events within 5 minutes.

How it Unfolded Across the App World

Considerations for the Community

A lot has happened in the last 24 hours, and several of us are working on fixes, retros, and pre-emptive planning for when something like this happens again. Just a few weeks ago, an almost identical issue hit the Google Maps iOS SDK, affecting DoorDash, Uber Eats, and many other apps that rely on maps.

The silver lining of such outages is that they draw attention to good software design and process. They rightly show where we need to introduce new best practices or fine-tune existing ones.

As a community, we need to answer some key questions. Patrick Danino from IMDb said it best.